I just read a fascinating article in the July Wired magazine about creative genius. The subject, an economist named David Galenson, has correlated age with perceived value in all sorts of creative fields, and has identified two curves. One group, which he dubs "Conceptualists", tends to peak early in their careers. For example, even though Picasso lived into his 90s, his most cited works in art history and other books were done before he was 30. By contrast, Mark Rothko (one of my favorites) did his most cited work the year he died, when he was 59. Galenson calls these guys "Experimentalists". He has done this correlation over painting, fiction, economics, music, and other fields. He believes that two distinct flavors of genius exist: one that manifests itself early, with bold, field-changing paradigm shifts (the conceptualist), and another, slower, accumulated genius (the experimentalist).

This instantly applies to other fields that he hasn't studied, like physics. I've often wondered why so many brilliant, earth-shattering discoveries are made by young men (Newton, Einstein, and Feynman were quite young when they produced their landmark works). However, if you look at someone like Stephen Hawking, he's still producing significant work. I think this is a great topic, one that resonates with observations I've made but never correlated myself. His book is named Old Masters and Young Geniuses: The Two Lifecycles of Artistic Creativity, and it's jumping to the top of my reading list with a bullet.

Friday, August 25, 2006

Sunday, August 20, 2006

Technology Snake Oil Part 8: Service Pack Shell Game

If you could see my face, you would see shock, dumbfoundment, and disgust. It pains me to even write about something as stupid as this, but it keeps rearing its head. The majority of my recent clients and someone I talked to casually from another company recently are relying on one poisonous meme, which seems to be spreading. The very bad idea: "We never deploy anything until the first service pack is released".

Let's think about this for a second. If a vendor produced the most perfect software ever conceived by mankind, there would never be a service pack, thus none of these companies would ever deploy it. On the other hand, if I release a really stinky version of some software that requires a service pack after a week, it now meets this unassailable standard of deployability.

Two factors have led to this smelly idea. The first is just pure laziness on the part of the decision makers who decide when things get deployed. Regardless of the service pack level, you should always evaluate software on its merits. A prescription like the Service Pack Shell Game ignores important factors in software and tries to find a single metric that indicates quality. This one is not even close. When Windows NT Service Pack 1 was released, it was a disaster. Service Pack 2 basically rolled back all the changes that SP1 wrought. That's why, to this day, you still see software that requires NT SP3, because that was the first real service pack that actually fixed anything.

The other reason this is happening is both more subtle and more dangerous. Have we really gotten to the point where we distrust commercial software this much? It's because vendors have consistently released software that is not ready for prime time and told us that it's of shipping quality. Companies even apply this selection process to open source software now. Open source has no marketing department pushing releases out the door. Generally, open source software ships when it is ready. Thus, most open source has fewer "service packs" than commercial software. Yet this same flawed prescription is often applied to it. Software, no matter what the source, should be vetted based on its quality, which should be determined by (as much as possible) objective means. Choosing a random metric like "after the first service pack" guarantees you'll get hit-and-miss quality software.

Friday, August 18, 2006

ejbKarmaCallback()

When you work with a noxious technology enough, it eventually comes back to bite you. Call it software development karma. While I was at OSCON in Portland, the first hotel room where I was placed had massive problems connecting to the Internet. It was wired access, so there was something related to my room that was causing the problem. I endured several maintenance guys and several phone calls with the actual provider. You all know the drill intimately.

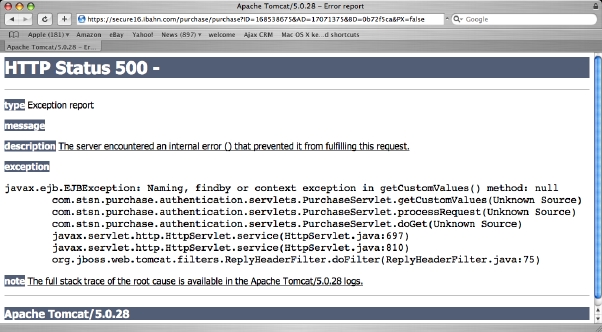

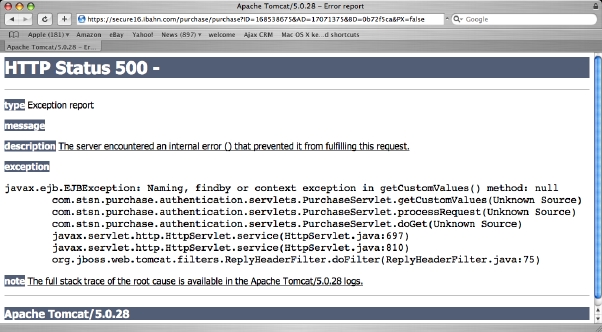

Anyway, at one point, it was declared "Fixed!", and I was instructed to point my faithful browser to the Internet. Lo and behold, Software Karma decreed that it was not to be. I got the following error, captured here in all its public glory.

Gaaaaaah! I now know waaaayyy more about their network infrastructure than I would like. They are using Tomcat and EJBs...to connect me to the Internet???!? I'm sure this is exactly the kind of application the EJB designers had in mind when they birthed this technology. Do we think that maybe this is total overkill? Couldn't the same be done with a simple web application backed by a database? Sigh. That's what I get for dabbling in evil -- sometimes it comes back to haunt you in the strangest places.

Sunday, August 13, 2006

Scumbag Spammers

If you have posted a comment to my blog lately, you've noticed that I've turned on the "Word Verification" feature of Blogspot. It's because of the scum of the earth, spammers. They've started posting spam comments (spamments?) to blogs. How clever. How annoying. How I hope they choke on their own vomit as they slide under a gas truck.

Saturday, August 12, 2006

Search Trumps Hierarchies

I wrote a while back about Pervasive Search, and how it changed the way I find things. I find myself using search more and more versus navigating hierarchies. As developers, we tend to create lots of files, in regular strict hierarchical structure (in fact, I've been blogging about namespaces vs. packages recently as well). File system paths are now too cumbersome to endure. Instead of walking through Explorer or the tree in my IDE, I'm using search.

I use search at two levels. Within the IDE, I use the brilliant feature in both IntelliJ and ReSharper to "Find File" (keyboard shortcut: Ctrl-N). This lets you type in the name (or partial name) of a file and open it in the editor. Better yet, it finds patterns of capital letters in names. So, if you are looking for the ShoppingCartMemento class, you could type "SCM", and "Find File" will find it. Highly addictive. And, it works equally well in IntelliJ and Visual Studio with ReSharper (and my Eclipse friends tell me it has made it there as well).

The other place I've been using search a lot is the filesystem, when looking for either a file on which to perform some operation (like Subversion log) or looking for some content within a file. Google Desktop Search has gotten better and better. You can now invoke it with the key chord of hitting Ctrl twice. And, you can download a plug-in that allows you to search through any type of file you want, including program and XML documents. Once you've found the file in question, you can right-click on the search result and open the containing folder. This is the only way to get to some file buried deep in some package or directory structure. My coding pair and I have started using this heavily, and it has sped us up. And, it eliminates annoying repetitive tasks like digging through the rubble of the filesystem looking for a gold nugget.
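To make the capital-letter matching in "Find File" concrete, here is a minimal sketch of how typing "SCM" could find ShoppingCartMemento. This is my guess at the idea, not the actual IntelliJ or ReSharper implementation; the CamelHumpMatcher class and its method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper, written only to illustrate the idea.
public static class CamelHumpMatcher {

    // Collects the capital letters of a name: "ShoppingCartMemento" gives "SCM".
    public static string Initials(string typeName) {
        StringBuilder humps = new StringBuilder();
        foreach (char c in typeName)
            if (char.IsUpper(c))
                humps.Append(c);
        return humps.ToString();
    }

    // True when the query is a prefix of the name's capital letters,
    // ignoring the case of what the user typed.
    public static bool Matches(string query, string typeName) {
        return query.Length > 0 &&
               Initials(typeName).StartsWith(query, StringComparison.OrdinalIgnoreCase);
    }

    // Filters a list of candidate names down to the ones the query matches.
    public static List<string> Find(string query, IEnumerable<string> typeNames) {
        List<string> hits = new List<string>();
        foreach (string name in typeNames)
            if (Matches(query, name))
                hits.Add(name);
        return hits;
    }
}
```

Calling CamelHumpMatcher.Find("SCM", names) over the class names in a project would keep ShoppingCartMemento and drop ShoppingCart. A real IDE presumably also ranks results and matches partial words, which this sketch ignores.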

Thursday, August 03, 2006

Partial Classes

When I first saw that .NET 2 supported partial classes, I groaned. It looked like a language feature that helps one thing and hurts a dozen more, once people start abusing it. However, I've come around to appreciate (and dare I say it, like) partial classes. They are obviously useful for code generation (which is why, I suspect, they were added in the first place). However, they are also handy for other problems.

Testing is one place where partial classes offer a better solution than the one offered by Visual Studio.NET 2005. In VS.NET, if you want to use MS-Test to test a private method, the tool uses code generation (without partial classes) to create a public proxy method that turns around and calls the private method for you using reflection. This is not a big surprise; the JUnitX add-ins in Java help you do the same thing. But using code gen for this is a smell: if you change your private method, the generated reflection code isn't smart enough to change with it, so you have to do code gen again, potentially overwriting some of the code you've added. Yuck.
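To show why the reflection approach is brittle, here is a hand-written sketch of that kind of proxy. It is not the code Visual Studio actually generates; Calculator, Twice, and PrivateProxy are hypothetical names invented for the illustration.

```csharp
using System;
using System.Reflection;

// Hypothetical class with a private method we want to reach from a test.
public class Calculator {
    private int Twice(int n) {
        return n * 2;
    }
}

// Hypothetical public proxy that calls the private method through reflection.
public static class PrivateProxy {
    public static int CallTwice(Calculator target, int n) {
        // The method is looked up by name, as a string. Rename Twice() and
        // this still compiles; it only fails when the test runs.
        MethodInfo method = typeof(Calculator).GetMethod(
            "Twice", BindingFlags.Instance | BindingFlags.NonPublic);
        if (method == null)
            throw new MissingMethodException("Calculator", "Twice");
        return (int) method.Invoke(target, new object[] { n });
    }
}
```

The string "Twice" is the weak point: the compiler can't check it, so every rename or signature change means regenerating or hand-fixing the proxy.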

Here's a better solution. I should add parenthetically that I don't usually bother testing private methods (especially if I have code coverage) because the public methods will exercise the private ones (otherwise, the private methods shouldn't be there). However, when doing TDD, I sometimes want to test a complex private method, and partial classes work great for this. The example I have here is a console application that does some number factoring (why isn't important in this context). I have a method
theFactorsFor() that returns the factors for an integer. Here is the PerfectNumberFinder class, including the method in question:

```csharp
using System;
using System.Collections.Generic;

namespace PerfectNumbers {
    internal partial class PerfectNumberFinder {

        // Prints every candidate from 2 to 499 and flags the perfect ones.
        public void executePerfectNumbers() {
            for (int i = 2; i < 500; i++) {
                Console.WriteLine(i);
                if (isPerfect(i))
                    Console.WriteLine("{0} is perfect", i);
            }
        }

        // Returns all factors of number (including 1 and number), sorted.
        // Assumes number >= 2, which is all the caller above passes in.
        private int[] theFactorsFor(int number) {
            int sqrt = (int) Math.Sqrt(number) + 1;
            List<int> factors = new List<int>(5);
            factors.Add(1);
            factors.Add(number);
            for (int i = 2; i <= sqrt; i++) {
                if (number % i == 0) {
                    // Add both halves of the factor pair, skipping duplicates.
                    if (!factors.Contains(i))
                        factors.Add(i);
                    if (!factors.Contains(number / i))
                        factors.Add(number / i);
                }
            }
            factors.Sort();
            return factors.ToArray();
        }

        // A number is perfect when its factors, excluding itself, sum to it.
        private bool isPerfect(int number) {
            return number == sumOf(theFactorsFor(number)) - number;
        }

        private int sumOf(int[] factors) {
            int sum = 0;
            foreach (int i in factors)
                sum += i;
            return sum;
        }
    }
}
```

Rather than use code gen to test the method, I've made the PerfectNumberFinder class a partial class. The other part of the partial is the NUnit TestFixture, shown here:

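```csharp
using System.Collections.Generic;
using NUnit.Framework;

namespace PerfectNumbers {
    [TestFixture]
    internal partial class PerfectNumberFinder {

        [Test]
        public void Get_factors_for_number() {
            int[] actual;
            Dictionary<int, int[]> expected =
                new Dictionary<int, int[]>();
            expected.Add(3, new int[] {1, 3});
            expected.Add(6, new int[] {1, 2, 3, 6});
            expected.Add(8, new int[] {1, 2, 4, 8});
            expected.Add(16, new int[] {1, 2, 4, 8, 16});
            expected.Add(24, new int[] {1, 2, 3, 4, 6, 8, 12, 24});

            foreach (int f in expected.Keys) {
                // The private method is visible here because this fixture
                // is the other half of the same partial class.
                actual = theFactorsFor(f);
                // Check the count first so extra factors can't slip through.
                Assert.AreEqual(expected[f].Length, actual.Length,
                                "Wrong number of factors");
                for (int i = 0; i < expected[f].Length; i++)
                    Assert.AreEqual(expected[f][i], actual[i],
                                    "Expected not equal");
            }
        }
    }
}
```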

I like this because it allows me to test the private method without any messy code generation, reflection, or other smelly work-arounds. Partial classes make great test fixtures because they have access to the internal workings of the class but don't have to reside in the same file. It's dangerous to pile infrastructure on new features like this (especially scaffolding-type infrastructure like classes), but this one seems like a more elegant solution to the problem at hand than stacks of code generation.

Tuesday, August 01, 2006

Pontificating at OSCON

I gave a talk at OSCON last week on Building Internal DSLs in Ruby. Apparently, there is a fair amount of interest in this subject: I was in one of the small rooms, but it was packed to the rafters, with standing room only along the back and side walls. I didn't realize it, but John Lam took a snapshot of me in action and posted it to his blog. It's tough to get a good shot while someone is talking, so it shows that John is both a formidable Ruby/.NET guy and a talented photographer!

The Fact of the JMatter

Several years ago, some brilliant designers created Naked Objects, a Java framework that generates applications from domain objects. You supply the POJOs with behavior, point Naked Objects at them, and you have a full-blown Swing application that allows you to edit, insert, delete, and browse the objects and their relationships. You could literally create sparse, functional applications in minutes. However, Naked Objects never got much beyond a proof of concept. The automatically generated applications were utilitarian but uninspiring.

Fast forward to now. Eitan Suez, one of my fellow No Fluff, Just Stuff speakers, has taken the Naked Objects idea and run with it. He has created the JMatter framework (found here). It takes the concepts of Naked Objects and updates them to the here and now. JMatter applications still auto-generate from POJOs, but the user interface and interactions are very rich. The sample application that appears on the JMatter web site literally took less than 2 hours to create; written by hand, it equates to developer-weeks' worth of effort. It also illustrates a growing trend in development: creating framework and scaffolding code automatically, freeing developers to focus more on producing applications. We've seen this approach done well in Ruby on Rails. JMatter shows that you can apply the same concepts to Swing development. Eitan has released JMatter with a MySQL-style license, so it's worth jumping over to his site to get a preview of the future.
